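We begin the analysis with the initialization of the SLUB allocator.

Back in mm_init(), once mem_init() has initialized the buddy allocator, the next call, kmem_cache_init(), is the entry point of the SLUB allocator. This function has three implementations under /mm, in slab.c, slob.c and slub.c, one per allocator, each with its own initialization. To analyze SLUB we focus on the implementation in slub.c.

The implementation of the function: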

【file:/mm/slub.c】

void __init kmem_cache_init(void)
{
    static __initdata struct kmem_cache boot_kmem_cache,
        boot_kmem_cache_node;

    if (debug_guardpage_minorder())
        slub_max_order = 0;

    kmem_cache_node = &boot_kmem_cache_node;
    kmem_cache = &boot_kmem_cache;

    create_boot_cache(kmem_cache_node, "kmem_cache_node",
        sizeof(struct kmem_cache_node), SLAB_HWCACHE_ALIGN);

    register_hotmemory_notifier(&slab_memory_callback_nb);

    /* Able to allocate the per node structures */
    slab_state = PARTIAL;

    create_boot_cache(kmem_cache, "kmem_cache",
            offsetof(struct kmem_cache, node) +
                nr_node_ids * sizeof(struct kmem_cache_node *),
            SLAB_HWCACHE_ALIGN);

    kmem_cache = bootstrap(&boot_kmem_cache);

    /*
     * Allocate kmem_cache_node properly from the kmem_cache slab.
     * kmem_cache_node is separately allocated so no need to
     * update any list pointers.
     */
    kmem_cache_node = bootstrap(&boot_kmem_cache_node);

    /* Now we can use the kmem_cache to allocate kmalloc slabs */
    create_kmalloc_caches(0);

#ifdef CONFIG_SMP
    register_cpu_notifier(&slab_notifier);
#endif

    printk(KERN_INFO
        "SLUB: HWalign=%d, Order=%d-%d, MinObjects=%d,"
        " CPUs=%d, Nodes=%d\n",
        cache_line_size(),
        slub_min_order, slub_max_order, slub_min_objects,
        nr_cpu_ids, nr_node_ids);
}

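Looking at the overall structure of this function, it is easy to see that register_hotmemory_notifier() and register_cpu_notifier() register kernel notifier-chain callbacks. Apart from those, the main functions involved are create_boot_cache(), bootstrap() and create_kmalloc_caches(). To understand the details, we analyze these three functions one by one.

First, create_boot_cache():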

【file:/mm/slab_common.c】

/* Create a cache during boot when no slab services are available yet */
void __init create_boot_cache(struct kmem_cache *s, const char *name, size_t size,
        unsigned long flags)
{
    int err;

    s->name = name;
    s->size = s->object_size = size;
    s->align = calculate_alignment(flags, ARCH_KMALLOC_MINALIGN, size);
    err = __kmem_cache_create(s, flags);

    if (err)
        panic("Creation of kmalloc slab %s size=%zu failed. Reason %d\n",
                    name, size, err);

    s->refcount = -1;   /* Exempt from merging for now */
}

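This function creates a cache for the allocator; in this first call it initializes the boot_kmem_cache_node structure. Inside it, calculate_alignment() computes the memory alignment value, while __kmem_cache_create() is the core cache-creation function, which mainly initializes the kmem_cache structure. The implementation of __kmem_cache_create() is analyzed in detail later, in the part on slab creation.

At this point create_boot_cache() has finished creating the cache for kmem_cache_node objects. Next, after register_hotmemory_notifier() registers its notifier-chain callback, create_boot_cache() is called again to create the cache for kmem_cache objects. Continuing down, we reach the two bootstrap() calls: bootstrap(&boot_kmem_cache) and bootstrap(&boot_kmem_cache_node).

bootstrap() migrates a temporary kmem_cache to its final kmem_cache and fixes up the related pointers so that they point to the final kmem_cache. The implementation: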
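To make the alignment step more concrete, here is a minimal userspace sketch of the kind of computation calculate_alignment() performs. It is a model under stated assumptions, not the kernel code: the cache line size (64), the minimum alignment (8), the flag value and every model_* name are chosen for illustration only.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed values for illustration; the kernel takes these from the architecture. */
#define MODEL_CACHE_LINE_SIZE 64UL
#define MODEL_MIN_ALIGN        8UL
#define MODEL_HWCACHE_ALIGN    0x1UL   /* hypothetical stand-in for SLAB_HWCACHE_ALIGN */

/* Round x up to a multiple of a, where a must be a power of two. */
static unsigned long model_round_up(unsigned long x, unsigned long a)
{
    assert(a != 0 && (a & (a - 1)) == 0);
    return (x + a - 1) & ~(a - 1);
}

/* Model of the alignment choice for a cache of objects of the given size. */
static unsigned long model_calculate_alignment(unsigned long flags,
                                               unsigned long align,
                                               unsigned long size)
{
    assert(size > 0);

    if (flags & MODEL_HWCACHE_ALIGN) {
        unsigned long ralign = MODEL_CACHE_LINE_SIZE;

        /* A small object does not need a whole cache line:
         * halve the alignment while the object fits in half of it. */
        while (size <= ralign / 2)
            ralign /= 2;
        if (ralign > align)
            align = ralign;
    }

    if (align < MODEL_MIN_ALIGN)
        align = MODEL_MIN_ALIGN;

    /* Keep the result a multiple of the pointer size. */
    return model_round_up(align, sizeof(void *));
}
```

With these assumed constants, a 16-byte object requested with the cache-line flag ends up 16-byte aligned, while a 200-byte object is aligned to the full 64-byte line.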

【file:/mm/slub.c】

/********************************************************************
 *          Basic setup of slabs
 *******************************************************************/

/*
 * Used for early kmem_cache structures that were allocated using
 * the page allocator. Allocate them properly then fix up the pointers
 * that may be pointing to the wrong kmem_cache structure.
 */
static struct kmem_cache * __init bootstrap(struct kmem_cache *static_cache)
{
    int node;
    struct kmem_cache *s = kmem_cache_zalloc(kmem_cache, GFP_NOWAIT);

    memcpy(s, static_cache, kmem_cache->object_size);

    /*
     * This runs very early, and only the boot processor is supposed to be
     * up. Even if it weren't true, IRQs are not up so we couldn't fire
     * IPIs around.
     */
    __flush_cpu_slab(s, smp_processor_id());
    for_each_node_state(node, N_NORMAL_MEMORY) {
        struct kmem_cache_node *n = get_node(s, node);
        struct page *p;

        if (n) {
            list_for_each_entry(p, &n->partial, lru)
                p->slab_cache = s;

#ifdef CONFIG_SLUB_DEBUG
            list_for_each_entry(p, &n->full, lru)
                p->slab_cache = s;
#endif
        }
    }
    list_add(&s->list, &slab_caches);
    return s;
}

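First, kmem_cache_zalloc() allocates space for a kmem_cache. Note that the call chain is kmem_cache_zalloc()->kmem_cache_alloc()->slab_alloc(), so the allocation is ultimately served from the kmem_cache cache that create_boot_cache() set up earlier. The kmem_cache data passed to bootstrap() is then copied with memcpy() into the newly allocated space, and __flush_cpu_slab() flushes the current CPU's slab. Next, for_each_node_state() iterates over the memory nodes and get_node() fetches the kmem_cache_node of each node. If it is not NULL, the partial slab list is walked and each slab's pointer to its kmem_cache is fixed up; if CONFIG_SLUB_DEBUG is enabled, the full slab list is walked as well and each slab's pointer is set in the same way. Finally, the kmem_cache is added to the global slab_caches list.

This shows how economical the Linux kernel design is: a temporary area is used to create and initialize the slab management framework, and the data in that temporary area is then migrated into the framework itself. The allocator thus manages itself, and no memory is wasted.

Continuing in kmem_cache_init() past the bootstrap() calls, we come to create_kmalloc_caches():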
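The migrate-and-fix-up pattern used by bootstrap() can be illustrated with a small userspace model. This is only a sketch: struct model_cache, struct model_page and model_bootstrap() are hypothetical names, calloc() stands in for kmem_cache_zalloc(), and a plain array stands in for the per-node partial list.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define MODEL_MAX_PAGES 4

struct model_cache;

/* Plays the role of struct page: each slab page points back to its cache. */
struct model_page {
    struct model_cache *owner;                  /* like page->slab_cache */
};

/* Plays the role of struct kmem_cache, reduced to a few fields. */
struct model_cache {
    const char *name;
    size_t object_size;
    struct model_page *pages[MODEL_MAX_PAGES];  /* like the partial list */
    int nr_pages;
};

/*
 * Copy a temporary (static) cache descriptor into dynamically allocated
 * storage, then repoint every page that still refers to the temporary
 * descriptor. Returns NULL if the allocation fails.
 */
static struct model_cache *model_bootstrap(struct model_cache *static_cache)
{
    struct model_cache *s = calloc(1, sizeof(*s));
    int i;

    if (!s)
        return NULL;

    memcpy(s, static_cache, sizeof(*s));

    /* Fix up the back pointers so they reference the final descriptor. */
    for (i = 0; i < s->nr_pages; i++)
        s->pages[i]->owner = s;

    return s;
}
```

After model_bootstrap() returns, nothing refers to the static descriptor any more, which is why the kernel can afford to declare its temporary copies as __initdata.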

【file:/mm/slab_common.c】

/*
 * Create the kmalloc array. Some of the regular kmalloc arrays
 * may already have been created because they were needed to
 * enable allocations for slab creation.
 */
void __init create_kmalloc_caches(unsigned long flags)
{
    int i;

    /*
     * Patch up the size_index table if we have strange large alignment
     * requirements for the kmalloc array. This is only the case for
     * MIPS it seems. The standard arches will not generate any code here.
     *
     * Largest permitted alignment is 256 bytes due to the way we
     * handle the index determination for the smaller caches.
     *
     * Make sure that nothing crazy happens if someone starts tinkering
     * around with ARCH_KMALLOC_MINALIGN
     */
    BUILD_BUG_ON(KMALLOC_MIN_SIZE > 256 ||
        (KMALLOC_MIN_SIZE & (KMALLOC_MIN_SIZE - 1)));

    for (i = 8; i < KMALLOC_MIN_SIZE; i += 8) {
        int elem = size_index_elem(i);

        if (elem >= ARRAY_SIZE(size_index))
            break;
        size_index[elem] = KMALLOC_SHIFT_LOW;
    }

    if (KMALLOC_MIN_SIZE >= 64) {
        /*
         * The 96 byte size cache is not used if the alignment
         * is 64 byte.
         */
        for (i = 64 + 8; i <= 96; i += 8)
            size_index[size_index_elem(i)] = 7;
    }

    if (KMALLOC_MIN_SIZE >= 128) {
        /*
         * The 192 byte sized cache is not used if the alignment
         * is 128 byte. Redirect kmalloc to use the 256 byte cache
         * instead.
         */
        for (i = 128 + 8; i <= 192; i += 8)
            size_index[size_index_elem(i)] = 8;
    }

    for (i = KMALLOC_SHIFT_LOW; i <= KMALLOC_SHIFT_HIGH; i++) {
        if (!kmalloc_caches[i]) {
            kmalloc_caches[i] = create_kmalloc_cache(NULL,
                            1 << i, flags);
        }

        /*
         * Caches that are not of the two-to-the-power-of size.
         * These have to be created immediately after the
         * earlier power of two caches
         */
        if (KMALLOC_MIN_SIZE <= 32 && !kmalloc_caches[1] && i == 6)
            kmalloc_caches[1] = create_kmalloc_cache(NULL, 96, flags);

        if (KMALLOC_MIN_SIZE <= 64 && !kmalloc_caches[2] && i == 7)
            kmalloc_caches[2] = create_kmalloc_cache(NULL, 192, flags);
    }

    /* Kmalloc array is now usable */
    slab_state = UP;

    for (i = 0; i <= KMALLOC_SHIFT_HIGH; i++) {
        struct kmem_cache *s = kmalloc_caches[i];
        char *n;

        if (s) {
            n = kasprintf(GFP_NOWAIT, "kmalloc-%d", kmalloc_size(i));

            BUG_ON(!n);
            s->name = n;
        }
    }

#ifdef CONFIG_ZONE_DMA
    for (i = 0; i <= KMALLOC_SHIFT_HIGH; i++) {
        struct kmem_cache *s = kmalloc_caches[i];

        if (s) {
            int size = kmalloc_size(i);
            char *n = kasprintf(GFP_NOWAIT,
                 "dma-kmalloc-%d", size);

            BUG_ON(!n);
            kmalloc_dma_caches[i] = create_kmalloc_cache(n,
                size, SLAB_CACHE_DMA | flags);
        }
    }
#endif
}

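The BUILD_BUG_ON() at the top of the function is a compile-time check: it guarantees that the minimum object size allowed by kmalloc is no larger than 256 and is a power of two. The for loop that follows handles the sizes from 8 bytes up to, but not including, KMALLOC_MIN_SIZE, setting their entries in the size_index array to KMALLOC_SHIFT_LOW. The two branches that compare KMALLOC_MIN_SIZE with 64 and 128 then adjust the size_index entries for the sizes from 72 to 96 bytes and from 136 to 192 bytes, respectively. For the SLUB allocator, KMALLOC_MIN_SIZE is 1 << KMALLOC_SHIFT_LOW and KMALLOC_SHIFT_LOW is 3 by default, so KMALLOC_MIN_SIZE is 8. None of these loops or branches therefore does anything, and size_index keeps the values from its original definition in /mm/slab_common.c. Further down, the for loop from KMALLOC_SHIFT_LOW to KMALLOC_SHIFT_HIGH calls create_kmalloc_cache() to initialize the kmalloc_caches table. The resulting kmalloc_caches is a table of slab caches with the sizes {0,96,192,8,16,32,64,128,256,512,1024,2048,4096,8192}. Once the caches are created, slab_state is set to UP and the name member of each kmem_cache is initialized. Finally, if CONFIG_ZONE_DMA is configured, the kmalloc_dma_caches table is created as well.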
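The mapping from a kmalloc_caches index to an object size, and the "kmalloc-%d" naming done after slab_state becomes UP, can be modelled in a few lines. This is a sketch that assumes KMALLOC_MIN_SIZE is 8, as in the SLUB case discussed above; model_kmalloc_size() and model_kmalloc_name() are hypothetical helpers, and snprintf() stands in for kasprintf().

```c
#include <assert.h>
#include <stdio.h>

#define MODEL_KMALLOC_MIN_SIZE 8   /* assumed: KMALLOC_SHIFT_LOW == 3 */

/*
 * Object size served by the cache at a given kmalloc_caches index.
 * Indexes 1 and 2 hold the two sizes that are not powers of two;
 * every index above 2 holds 1 << index; index 0 is unused.
 */
static int model_kmalloc_size(int index)
{
    assert(index >= 0 && index < 31);

    if (index > 2)
        return 1 << index;
    if (index == 1 && MODEL_KMALLOC_MIN_SIZE <= 32)
        return 96;
    if (index == 2 && MODEL_KMALLOC_MIN_SIZE <= 64)
        return 192;
    return 0;
}

/* Build the cache name the same way the naming loop does, e.g. "kmalloc-96". */
static int model_kmalloc_name(char *buf, size_t len, int index)
{
    return snprintf(buf, len, "kmalloc-%d", model_kmalloc_size(index));
}
```

Walking the indexes 0 through 13 with model_kmalloc_size() reproduces the size list of the table described above, with 2048 at index 11 and 8192 at index 13.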

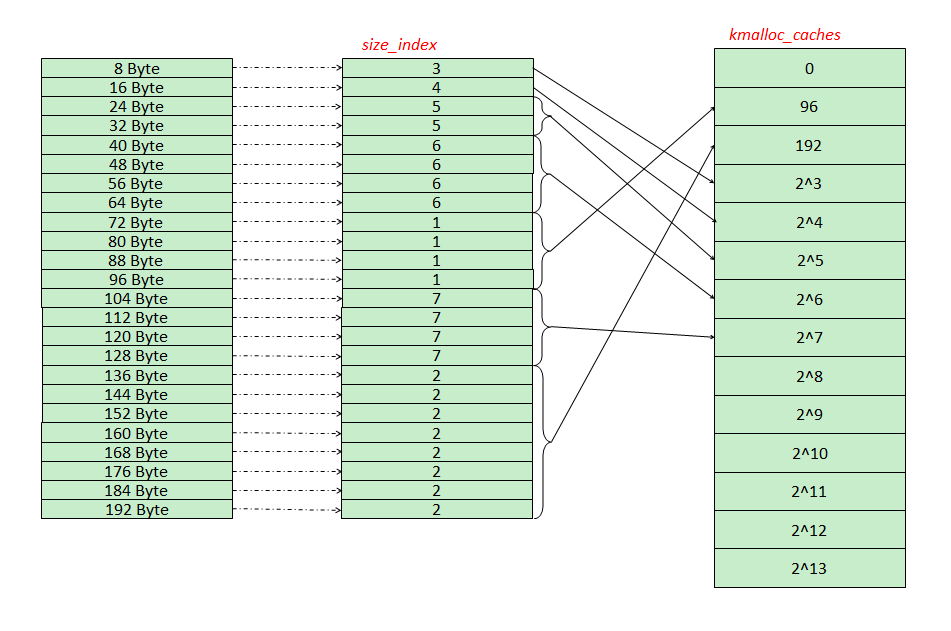

From this information, the correspondence between size_index and kmalloc_caches can be worked out.

Next, the implementation of create_kmalloc_cache():
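The correspondence can be sketched as a lookup: a request of up to 192 bytes goes through the size_index table, and a larger request uses the position of the highest set bit of size - 1. The table below reflects the default size_index values as I understand them from /mm/slab_common.c, so treat it as an assumption; the model_* names are hypothetical, and model_fls() is a simple stand-in for the kernel's fls().

```c
#include <assert.h>
#include <stddef.h>

/* Assumed default table: one entry per 8-byte step, covering 8..192 bytes. */
static const signed char model_size_index[24] = {
    3,                      /* 8 */
    4,                      /* 16 */
    5, 5,                   /* 24, 32 */
    6, 6, 6, 6,             /* 40 .. 64 */
    1, 1, 1, 1,             /* 72 .. 96   -> 96-byte cache */
    7, 7, 7, 7,             /* 104 .. 128 */
    2, 2, 2, 2, 2, 2, 2, 2  /* 136 .. 192 -> 192-byte cache */
};

/* Table slot for a request of the given size (size must be 1..192). */
static size_t model_size_index_elem(size_t bytes)
{
    return (bytes - 1) / 8;
}

/* 1-based position of the highest set bit, 0 for x == 0. */
static int model_fls(unsigned long x)
{
    int bit = 0;

    while (x) {
        bit++;
        x >>= 1;
    }
    return bit;
}

/* Index into kmalloc_caches that would serve a request of the given size. */
static int model_kmalloc_index(size_t size)
{
    assert(size > 0);

    if (size <= 192)
        return model_size_index[model_size_index_elem(size)];
    return model_fls((unsigned long)size - 1);
}
```

For example, a 100-byte request maps to index 7 (the 128-byte cache), while a 72-byte request maps to index 1 (the 96-byte cache) rather than being rounded up to 128 bytes.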

【file:/mm/slab_common.c】

struct kmem_cache *__init create_kmalloc_cache(const char *name, size_t size,
                unsigned long flags)
{
    struct kmem_cache *s = kmem_cache_zalloc(kmem_cache, GFP_NOWAIT);

    if (!s)
        panic("Out of memory when creating slab %s\n", name);

    create_boot_cache(s, name, size, flags);
    list_add(&s->list, &slab_caches);
    s->refcount = 1;
    return s;
}

It first allocates a kmem_cache object through kmem_cache_zalloc(), then calls create_boot_cache() to create the slab cache and adds it to the slab_caches list. create_boot_cache() is the same function from /mm/slab_common.c that was listed earlier in the analysis of kmem_cache_init(); in this context it is clear that it relies on __kmem_cache_create() to create slab caches of the various sizes used by later memory allocations.

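This completes the initialization of the SLUB allocation framework. To summarize the flow of kmem_cache_init(): it first calls create_boot_cache() to set up the SLUB management framework for kmem_cache_node objects, then register_hotmemory_notifier() registers the memory-hotplug notifier callback. Notably, slab_state is then set to PARTIAL, which means that kmem_cache_node objects can now be allocated. Next, create_boot_cache() sets up the management framework for kmem_cache objects, at which point the slabs for all management structures the SLUB allocator needs are initialized. Because this early management borrows temporary static variables, bootstrap() then moves the kmem_cache and kmem_cache_node management structures into objects allocated from the SLUB framework itself, making it self-managing. Finally, create_kmalloc_caches() initializes a set of slab caches of different sizes for later memory allocations.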
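The ordering constraints in this summary can be condensed into a small state model. This is a simplified sketch, not kernel code: it keeps only the three states mentioned in this article (the kernel's slab_state enum has more), and enum model_slab_state and the model_* helpers are hypothetical names.

```c
#include <assert.h>

/* Simplified: only the states discussed in this article. */
enum model_slab_state {
    MODEL_DOWN,     /* nothing usable yet */
    MODEL_PARTIAL,  /* kmem_cache_node objects can be allocated */
    MODEL_UP        /* the kmalloc array is usable */
};

/* kmem_cache_node objects are available from PARTIAL onwards. */
static int model_can_alloc_node(enum model_slab_state s)
{
    return s >= MODEL_PARTIAL;
}

/* kmalloc() is available once create_kmalloc_caches() has finished. */
static int model_can_kmalloc(enum model_slab_state s)
{
    return s >= MODEL_UP;
}

/* Replay the boot order described above and return the final state. */
static enum model_slab_state model_kmem_cache_init(void)
{
    enum model_slab_state state = MODEL_DOWN;

    /* Step 1: create the kmem_cache_node cache in static storage. */
    state = MODEL_PARTIAL;

    /* Step 2: creating the kmem_cache cache needs per-node structures. */
    assert(model_can_alloc_node(state));

    /* Step 3: bootstrap() both caches; kmalloc is still unavailable. */
    assert(!model_can_kmalloc(state));

    /* Step 4: create the kmalloc caches, after which the array is usable. */
    state = MODEL_UP;
    return state;
}
```

The point of the model is the dependency order: the per-node cache must exist before any other cache can be created, and general-purpose kmalloc() only becomes available at the very end.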